Image Cropper And Uploader

- state controller

- image uploads

- aws

- db

- s3

- cloudfront

- rekognition

- rate limiting



<script lang="ts">

import { fade } from 'svelte/transition';

import { FileInput } from '$lib/components';

import { ImageCrop, UploadProgress, ImageCardBtns, ImageCardOverlays } from '$lib/image/components';

import { objectStorage, CropImgUploadController } from '$lib/object-storage/client';

import ConfirmDelAvatarModal from './ConfirmDelAvatarModal.svelte';

import { updateAvatarCrop } from './avatar/crop.json/client';

import { getSignedAvatarUploadUrl, checkAndSaveUploadedAvatar, deleteAvatar } from './avatar/upload.json/client';

import { MAX_UPLOAD_SIZE } from './avatar/upload.json/common';

interface Props {

avatar: DB.User['avatar'];

onNewAvatar: (img: DB.User['avatar']) => void | Promise<void>;

onCancel: () => void;

}

const { avatar, onCancel, onNewAvatar }: Props = $props();

const controller = new CropImgUploadController({

saveCropToDb: updateAvatarCrop.send,

delImg: deleteAvatar.send,

getUploadArgs: getSignedAvatarUploadUrl.send,

upload: objectStorage.upload,

saveToDb: checkAndSaveUploadedAvatar.send,

});

if (avatar) controller.toCropPreexisting(avatar);

else controller.toFileSelect();

const s = $derived(controller.value);

let deleteConfirmationModalOpen = $state(false);

</script>

{#if s.state === 'file_selecting'}

<FileInput

onSelect={async (files: File[]) => {

const erroredCanceledOrLoaded = await s.loadFiles({ files, MAX_UPLOAD_SIZE });

if (erroredCanceledOrLoaded.state === 'uri_loaded') erroredCanceledOrLoaded.startCrop();

}}

accept="image/jpeg, image/png"

/>

{:else}

<div class="relative aspect-square h-80 w-80 sm:h-[32rem] sm:w-[32rem]">

{#if s.state === 'error'}

<ImageCardOverlays

img={{ kind: 'overlay', url: s.img.url, crop: s.img.crop, blur: true }}

overlay={{ red: true }}

{onCancel}

errorMsgs={s.errorMsgs}

/>

{:else if s.state === 'cropping_preexisting'}

<ImageCrop

url={s.url}

crop={s.crop}

onSave={async (crop) => {

const erroredCanceledOrCompleted = await s.saveCropValToDb({ crop });

if (erroredCanceledOrCompleted.state === 'completed') await onNewAvatar(erroredCanceledOrCompleted.savedImg);

}}

/>

<ImageCardBtns {onCancel} onNew={controller.toFileSelect} onDelete={() => (deleteConfirmationModalOpen = true)} />

<ConfirmDelAvatarModal

bind:open={deleteConfirmationModalOpen}

handleDelete={async () => {

const erroredCanceledOrCompleted = await s.deleteImg();

if (erroredCanceledOrCompleted.state === 'completed') await onNewAvatar(erroredCanceledOrCompleted.savedImg);

}}

/>

{:else if s.state === 'db_updating_preexisting'}

<ImageCardOverlays img={{ kind: 'overlay', url: s.url, crop: s.crop }} />

<ImageCardBtns loader />

{:else if s.state === 'deleting_preexisting'}

<ImageCardOverlays img={{ kind: 'overlay', url: s.url, crop: s.crop }} overlay={{ pulsingWhite: true }} />

<ImageCardBtns loader />

{:else if s.state === 'uri_loading'}

<ImageCardOverlays img={{ kind: 'skeleton' }} />

{:else if s.state === 'uri_loaded'}

<ImageCardOverlays img={{ kind: 'overlay', url: s.uri, crop: null }} />

{:else if s.state === 'cropping'}

<ImageCrop

url={s.uri}

onSave={async (crop) => {

const erroredCanceledOrCompleted = await s.loadCropValue({ crop }).uploadCropped();

if (erroredCanceledOrCompleted.state === 'completed') await onNewAvatar(erroredCanceledOrCompleted.savedImg);

}}

/>

<ImageCardBtns {onCancel} onNew={controller.toFileSelect} />

{:else if s.state === 'cropped'}

<ImageCardOverlays img={{ kind: 'overlay', url: s.uri, crop: s.crop }} />

{:else if s.state === 'upload_url_fetching' || s.state === 'image_storage_uploading' || s.state === 'db_saving'}

<ImageCardOverlays img={{ kind: 'overlay', url: s.uri, crop: s.crop }} overlay={{ pulsingWhite: true }} />

<ImageCardBtns loader />

<div out:fade><UploadProgress uploadProgress={s.uploadProgress} /></div>

{:else if s.state === 'completed'}

<ImageCardOverlays img={{ kind: 'full', url: s.savedImg?.url, crop: s.savedImg?.crop }} />

<ImageCardBtns badgeCheck />

{:else if s.state === 'idle'}

<!-- -->

{/if}

</div>

{/if}

import { circOut } from 'svelte/easing';

import { tweened, type Tweened } from 'svelte/motion';

import { defaultCropValue, fileToDataUrl, humanReadableFileSize } from '$lib/image/client';

import type { CroppedImg, CropValue } from '$lib/image/common';

import type { Result } from '$lib/utils/common';

//#region States

//#region Static

export type Idle = { state: 'idle' }; // entry state

export type Err = {

state: 'error';

img: { url: string | null; crop: CropValue | null };

errorMsgs: [string, string] | [string, null]; // [title, body]

};

export type Completed = { state: 'completed'; savedImg: CroppedImg | null };

//#endregion Static

//#region Preexisting Image

export type CroppingPreexisting = {

state: 'cropping_preexisting'; // entry state

url: string;

crop: CropValue;

/**

* Update a preexisting image's crop value.

* States: 'cropping_preexisting' -> 'db_updating_preexisting' -> 'completed'

*/

saveCropValToDb({ crop }: { crop: CropValue }): Promise<Idle | Err | Completed>;

/**

* Delete a preexisting image.

* States: 'cropping_preexisting' -> 'deleting_preexisting' -> 'completed'

*/

deleteImg: () => Promise<Idle | Err | Completed>;

};

export type DbUpdatingPreexisting = {

state: 'db_updating_preexisting';

url: string;

crop: CropValue;

updateDbPromise: Promise<Result<{ savedImg: CroppedImg | null }>>;

};

export type DeletingPreexisting = {

state: 'deleting_preexisting';

url: string;

crop: CropValue;

deletePreexistingImgPromise: Promise<Result<Result.Success>>;

};

//#endregion Preexisting Image

//#region New Image

export type FileSelecting = {

state: 'file_selecting'; // entry state

/**

* Convert a file into an in memory uri, guarding max file size.

* States: 'file_selecting' -> 'uri_loading' -> 'uri_loaded'

*/

loadFiles: (a: { files: File[]; MAX_UPLOAD_SIZE: number }) => Promise<Idle | Err | UriLoaded>;

};

export type UriLoading = {

state: 'uri_loading';

file: File;

uriPromise: Promise<{ uri: string; error?: never } | { uri?: never; error: Error | DOMException }>;

};

export type UriLoaded = {

state: 'uri_loaded';

file: File;

uri: string;

/** States: 'uri_loaded' -> 'cropped' */

skipCrop: (a?: { crop: CropValue }) => Cropped;

/** States: 'uri_loaded' -> 'cropping' */

startCrop: () => Cropping;

};

export type Cropping = {

state: 'cropping';

file: File;

uri: string;

/** States: 'cropping' -> 'cropped' */

loadCropValue: ({ crop }: { crop: CropValue }) => Cropped;

};

export type Cropped = {

state: 'cropped';

file: File;

uri: string;

crop: CropValue;

/** States: 'cropped' -> 'upload_url_fetching' -> 'image_storage_uploading' -> 'db_saving' -> 'completed' */

uploadCropped(): Promise<Idle | Err | Completed>;

};

export type UploadUrlFetching = {

state: 'upload_url_fetching';

file: File;

uri: string;

crop: CropValue;

uploadProgress: Tweened<number>;

getUploadArgsPromise: Promise<Result<{ url: string; formDataFields: Record<string, string> }>>;

};

export type ImageStorageUploading = {

state: 'image_storage_uploading';

uri: string;

crop: CropValue;

imageUploadPromise: Promise<Result<{ status: number }>>;

uploadProgress: Tweened<number>;

};

export type DbSaving = {

state: 'db_saving';

uri: string;

crop: CropValue;

saveToDbPromise: Promise<Result<{ savedImg: CroppedImg | null }>>;

uploadProgress: Tweened<number>;

};

//#endregion New Image

export type CropControllerState =

| Idle

| Err

| Completed

| CroppingPreexisting

| DbUpdatingPreexisting

| DeletingPreexisting

| FileSelecting

| UriLoading

| UriLoaded

| Cropping

| Cropped

| UploadUrlFetching

| ImageStorageUploading

| DbSaving;

export type StateName = CropControllerState['state'];

//#endregion States

//#region Callbacks

type GetUploadArgs = () => Promise<Result<{ url: string; formDataFields: Record<string, string> }>>;

type Upload = (a: { url: string; formData: FormData; uploadProgress: { tweened: Tweened<number>; scale: number } }) => {

promise: Promise<Result<{ status: number }>>;

abort: () => void;

};

type SaveToDb = (a: { crop: CropValue }) => Promise<Result<{ savedImg: CroppedImg | null }>>;

type DeletePreexistingImg = () => Promise<Result<Result.Success>>;

type SaveCropToDb = (a: { crop: CropValue }) => Promise<Result<{ savedImg: CroppedImg | null }>>;

//#endregion Callbacks

export class CropImgUploadController {

#state: CropControllerState = $state.raw({ state: 'idle' });

#isIdle: boolean = $derived(this.#state.state === 'idle');

#cleanup: null | (() => void) = null;

#getUploadArgs: GetUploadArgs;

#upload: Upload;

#saveToDb: SaveToDb;

#delImg: DeletePreexistingImg;

#saveCropToDb: SaveCropToDb;

get value() {

return this.#state;

}

/** Clean up and move to 'idle' */

toIdle = async (): Promise<Idle> => {

this.#cleanup?.();

return (this.#state = { state: 'idle' });

};

constructor(a: {

getUploadArgs: GetUploadArgs;

upload: Upload;

saveToDb: SaveToDb;

delImg: DeletePreexistingImg;

saveCropToDb: SaveCropToDb;

}) {

this.#getUploadArgs = a.getUploadArgs;

this.#upload = a.upload;

this.#saveToDb = a.saveToDb;

this.#delImg = a.delImg;

this.#saveCropToDb = a.saveCropToDb;

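// Returning toIdle registers it as the effect's teardown, so pending work is cleaned up when the owner is destroyed.
$effect(() => this.toIdle);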

}

/** Clean up and move to 'cropping_preexisting' */

toCropPreexisting = ({ crop, url }: { crop: CropValue; url: string }) => {

this.#cleanup?.();

this.#state = {

state: 'cropping_preexisting',

crop,

url,

saveCropValToDb: ({ crop }) => this.#saveCropValToDb({ crop, url }),

deleteImg: () => this.#deleteImg({ crop, url }),

};

};

#saveCropValToDb = async ({ crop, url }: { crop: CropValue; url: string }): Promise<Idle | Err | Completed> => {

const updateDbPromise = this.#saveCropToDb({ crop });

this.#state = { state: 'db_updating_preexisting', updateDbPromise, crop, url };

const { data, error } = await updateDbPromise;

if (this.#isIdle) return { state: 'idle' };

else if (error) return (this.#state = { state: 'error', img: { url, crop }, errorMsgs: [error.message, null] });

else return (this.#state = { state: 'completed', savedImg: data.savedImg });

};

#deleteImg = async ({ crop, url }: { crop: CropValue; url: string }): Promise<Idle | Err | Completed> => {

const deletePreexistingImgPromise = this.#delImg();

this.#state = { state: 'deleting_preexisting', deletePreexistingImgPromise, crop, url };

const { error } = await deletePreexistingImgPromise;

if (this.#isIdle) return { state: 'idle' };

else if (error) return (this.#state = { state: 'error', img: { url, crop }, errorMsgs: [error.message, null] });

else return (this.#state = { state: 'completed', savedImg: null });

};

/** Clean up and move to 'file_selecting' */

toFileSelect = () => {

this.#cleanup?.();

this.#state = { state: 'file_selecting', loadFiles: this.#loadFiles };

};

#loadFiles = async ({

files,

MAX_UPLOAD_SIZE,

}: {

files: File[];

MAX_UPLOAD_SIZE: number;

}): Promise<Idle | Err | UriLoaded> => {

const file = files[0];

if (!file) {

return (this.#state = { state: 'error', img: { url: null, crop: null }, errorMsgs: ['No file selected', null] });

}

if (file.size > MAX_UPLOAD_SIZE) {

return (this.#state = {

state: 'error',

img: { url: null, crop: null },

errorMsgs: [

`File size (${humanReadableFileSize(file.size)}) must be less than ${humanReadableFileSize(MAX_UPLOAD_SIZE)}`,

null,

],

});

}

const uriPromise = fileToDataUrl(file);

this.#state = { state: 'uri_loading', file, uriPromise };

const { uri } = await uriPromise;

if (this.#isIdle) return { state: 'idle' };

if (!uri) {

return (this.#state = {

state: 'error',

img: { url: null, crop: null },

errorMsgs: ['Error reading file', null],

});

}

return (this.#state = {

state: 'uri_loaded',

file,

uri,

skipCrop: ({ crop } = { crop: defaultCropValue }) => this.#toCropped({ crop, file, uri }),

startCrop: () =>

(this.#state = {

state: 'cropping',

file,

uri,

loadCropValue: ({ crop }) => this.#toCropped({ crop, file, uri }),

}),

});

};

#toCropped = ({ crop, file, uri }: { crop: CropValue; file: File; uri: string }): Cropped => {

return (this.#state = {

state: 'cropped',

crop,

file,

uri,

uploadCropped: async () => this.#uploadPipeline({ crop, file, uri }),

});

};

/** Get the upload url, upload the file, and save it to the DB.

* States: any -> 'upload_url_fetching' -> 'image_storage_uploading' -> 'db_saving' -> 'completed'

* */

#uploadPipeline = async ({

file,

uri,

crop,

}: {

file: File;

uri: string;

crop: CropValue;

}): Promise<Idle | Err | Completed> => {

/** Get the upload url from our server (progress 3-10%) */

const uploadProgress = tweened(0, { easing: circOut });

const getUploadArgsPromise = this.#getUploadArgs();

uploadProgress.set(3);

this.#state = { state: 'upload_url_fetching', file, uri, crop, uploadProgress, getUploadArgsPromise };

const getUploadArgsPromised = await getUploadArgsPromise;

if (this.#isIdle) return { state: 'idle' };

if (getUploadArgsPromised.error) {

return (this.#state = {

state: 'error',

img: { url: uri, crop },

errorMsgs: [getUploadArgsPromised.error.message, null],

});

}

uploadProgress.set(10);

const { url, formDataFields } = getUploadArgsPromised.data;

const formData = new FormData();

for (const [key, value] of Object.entries(formDataFields)) {

formData.append(key, value);

}

formData.append('file', file);

/** Upload file to image storage (progress 10-90%) */

const { abort: abortUpload, promise: imageUploadPromise } = this.#upload({

url,

formData,

uploadProgress: { tweened: uploadProgress, scale: 0.9 },

});

this.#cleanup = () => abortUpload();

this.#state = {

state: 'image_storage_uploading',

crop,

uri,

imageUploadPromise,

uploadProgress,

};

const imageUploadPromised = await imageUploadPromise;

this.#cleanup = null;

if (this.#isIdle) return { state: 'idle' };

if (imageUploadPromised.error) {

return (this.#state = {

state: 'error',

img: { url: uri, crop },

errorMsgs: [imageUploadPromised.error.message, null],

});

}

/** Save url to db (progress 90-100%) */

const interval = setInterval(() => {

uploadProgress.update((v) => {

const newProgress = Math.min(v + 1, 100);

if (newProgress === 100) clearInterval(interval);

return newProgress;

});

}, 20);

const saveToDbPromise = this.#saveToDb({ crop });

this.#cleanup = () => clearInterval(interval);

this.#state = {

state: 'db_saving',

crop,

uri,

saveToDbPromise,

uploadProgress,

};

const saveToDbPromised = await saveToDbPromise;

this.#cleanup = null;

if (this.#isIdle) return { state: 'idle' };

if (saveToDbPromised.error) {

return (this.#state = {

state: 'error',

img: { url: uri, crop },

errorMsgs: [saveToDbPromised.error.message, null],

});

}

clearInterval(interval);

uploadProgress.set(100);

return (this.#state = { state: 'completed', savedImg: saveToDbPromised.data.savedImg });

};

}

import { logger } from '$lib/logging/client';

import type { Result } from '$lib/utils/common';

import type { ObjectStorageClient } from './types';

export const uploadToCloudStorage: ObjectStorageClient['upload'] = ({ url, formData, uploadProgress }) => {

const req = new XMLHttpRequest();

req.open('POST', url);

if (uploadProgress?.tweened) {

req.upload.addEventListener('progress', (e) =>

uploadProgress.tweened?.update((p) => Math.max(p, (e.loaded / e.total) * 100 * (uploadProgress?.scale ?? 1))),

);

}

const promise = new Promise<Result<{ status: number }>>((resolve) => {

req.onreadystatechange = () => {

if (req.readyState === 4) {

if (req.status >= 200 && req.status < 300) {

resolve({ data: { status: req.status } });

} else if (req.status === 0) {

resolve({ error: { status: 499, message: 'Upload aborted' } });

} else {

logger.error(`Error uploading file. Status: ${req.status}. Response: ${req.responseText}`);

resolve({ error: { status: req.status, message: 'Error uploading file' } });

}

}

};

});

req.send(formData);

return { abort: req.abort.bind(req), promise };

};

import { croppedImgSchema, type CroppedImg } from '$lib/image/common';

import type { z } from 'zod';

export type UpdateAvatarCropReq = Omit<z.infer<typeof croppedImgSchema>, 'url'>;

export type UpdateAvatarCropRes = { savedImg: CroppedImg };

import { ClientFetcher } from '$lib/http/client.svelte';

import type { RouteId } from './$types';

import type { UpdateAvatarCropRes, UpdateAvatarCropReq } from './common';

export const updateAvatarCrop = new ClientFetcher<RouteId, UpdateAvatarCropRes, UpdateAvatarCropReq>(

'PUT',

'/account/profile/avatar/crop.json',

);

import { db } from '$lib/db/server';

import { jsonFail, jsonOk, parseReqJson } from '$lib/http/server';

import { croppedImgSchema } from '$lib/image/common';

import type { UpdateAvatarCropRes } from './common';

import type { RequestHandler } from '@sveltejs/kit';

const updateAvatarCrop: RequestHandler = async ({ locals, request }) => {

const { user } = await locals.seshHandler.userOrRedirect();

if (!user.avatar) return jsonFail(400, 'No avatar to crop');

const body = await parseReqJson(request, croppedImgSchema, { overrides: { url: user.avatar.url } });

if (!body.success) return jsonFail(400);

const avatar = body.data;

await db.user.update({ userId: user.id, values: { avatar } });

return jsonOk<UpdateAvatarCropRes>({ savedImg: avatar });

};

export const PUT = updateAvatarCrop;

import { z } from 'zod';

import { cropSchema, type CroppedImg } from '$lib/image/common';

import type { Result } from '$lib/utils/common';

export const MAX_UPLOAD_SIZE = 1024 * 1024 * 3; // 3MB

export type GetSignedAvatarUploadUrlRes = { url: string; objectKey: string; formDataFields: Record<string, string> };

export const checkAndSaveUploadedAvatarReqSchema = z.object({ crop: cropSchema });

export type CheckAndSaveUploadedAvatarReq = z.infer<typeof checkAndSaveUploadedAvatarReqSchema>;

export type CheckAndSaveUploadedAvatarRes = { savedImg: CroppedImg | null };

export type DeleteAvatarRes = Result.Success;

import { ClientFetcher } from '$lib/http/client.svelte';

import type { RouteId } from './$types';

import type {

GetSignedAvatarUploadUrlRes,

CheckAndSaveUploadedAvatarRes,

CheckAndSaveUploadedAvatarReq,

DeleteAvatarRes,

} from './common';

const routeId: RouteId = '/account/profile/avatar/upload.json';

export const getSignedAvatarUploadUrl = new ClientFetcher<RouteId, GetSignedAvatarUploadUrlRes>('GET', routeId);

export const checkAndSaveUploadedAvatar = new ClientFetcher<

RouteId,

CheckAndSaveUploadedAvatarRes,

CheckAndSaveUploadedAvatarReq

>('PUT', routeId);

export const deleteAvatar = new ClientFetcher<RouteId, DeleteAvatarRes>('DELETE', routeId);

import { db } from '$lib/db/server';

import { jsonFail, jsonOk, parseReqJson } from '$lib/http/server';

import { objectStorage } from '$lib/object-storage/server';

import { createLimiter } from '$lib/rate-limit/server';

import { toHumanReadableTime } from '$lib/utils/common';

import {

MAX_UPLOAD_SIZE,

checkAndSaveUploadedAvatarReqSchema,

type DeleteAvatarRes,

type CheckAndSaveUploadedAvatarRes,

type GetSignedAvatarUploadUrlRes,

} from './common';

import type { RequestHandler } from '@sveltejs/kit';

// generateS3UploadPost enforces max upload size and denies any upload that we don't sign

// uploadLimiter rate limits the number of uploads a user can do

// presigned ensures we don't have to trust the client to tell us what the uploaded objectUrl is after the upload

// unsavedUploadCleaner ensures that we don't miss cleaning up an object in S3 if the user doesn't notify us of the upload

// detectModerationLabels prevents explicit content

const EXPIRE_SECONDS = 60;

const uploadLimiter = createLimiter({

id: 'checkAndSaveUploadedAvatar',

limiters: [

{ kind: 'global', rate: [300, 'd'] },

{ kind: 'userId', rate: [2, '15m'] },

{ kind: 'ipUa', rate: [3, '15m'] },

],

});

const unsavedUploadCleaner = objectStorage.createUnsavedUploadCleaner({

jobDelaySeconds: EXPIRE_SECONDS,

getStoredUrl: async ({ userId }) => (await db.user.get({ userId }))?.avatar?.url,

});

const getSignedAvatarUploadUrl: RequestHandler = async (event) => {

const { locals } = event;

const { user } = await locals.seshHandler.userOrRedirect();

const rateCheck = await uploadLimiter.check(event, { log: { userId: user.id } });

if (rateCheck.forbidden) return jsonFail(403);

if (rateCheck.limiterKind === 'global')

return jsonFail(

429,

`This demo has hit its 24h max. Please try again in ${toHumanReadableTime(rateCheck.retryAfterSec)}`,

);

if (rateCheck.limited) return jsonFail(429, rateCheck.humanTryAfter('uploads'));

const key = objectStorage.keyController.create.user.avatar({ userId: user.id });

const res = await objectStorage.generateUploadFormDataFields({

key,

maxContentLength: MAX_UPLOAD_SIZE,

expireSeconds: EXPIRE_SECONDS,

});

if (!res) return jsonFail(500, 'Failed to generate upload URL');

await db.presigned.insertOrOverwrite({

url: objectStorage.keyController.transform.keyToObjectUrl(key),

userId: user.id,

key,

});

unsavedUploadCleaner.addDelayedJob({

cdnUrl: objectStorage.keyController.transform.keyToCDNUrl(key),

userId: user.id,

});

return jsonOk<GetSignedAvatarUploadUrlRes>({ url: res.url, formDataFields: res.formDataFields, objectKey: key });

};

const checkAndSaveUploadedAvatar: RequestHandler = async ({ request, locals }) => {

const { user } = await locals.seshHandler.userOrRedirect();

const body = await parseReqJson(request, checkAndSaveUploadedAvatarReqSchema);

if (!body.success) return jsonFail(400);

const presignedObjectUrl = await db.presigned.get({ userId: user.id });

if (!presignedObjectUrl) return jsonFail(400);

const cdnUrl = objectStorage.keyController.transform.objectUrlToCDNUrl(presignedObjectUrl.url);

const imageExists = await fetch(cdnUrl, { method: 'HEAD' }).then((res) => res.ok);

if (!imageExists) {

await db.presigned.delete({ userId: user.id });

unsavedUploadCleaner.removeJob({ cdnUrl });

return jsonFail(400);

}

const newAvatar = { crop: body.data.crop, url: cdnUrl };

const oldAvatar = user.avatar;

const newKey = objectStorage.keyController.transform.objectUrlToKey(presignedObjectUrl.url);

const { error: moderationError } = await objectStorage.detectModerationLabels({ s3Key: newKey });

if (moderationError) {

unsavedUploadCleaner.removeJob({ cdnUrl });

await Promise.all([objectStorage.delete({ key: newKey, guard: null }), db.presigned.delete({ userId: user.id })]);

return jsonFail(422, moderationError.message);

}

if (objectStorage.keyController.is.cdnUrl(oldAvatar?.url) && newAvatar.url !== oldAvatar.url) {

const oldKey = objectStorage.keyController.transform.cdnUrlToKey(oldAvatar.url);

await Promise.all([

objectStorage.delete({

key: oldKey,

guard: () => objectStorage.keyController.guard.user.avatar({ key: oldKey, ownerId: user.id }),

}),

objectStorage.invalidateCDN({ keys: [oldKey] }),

]);

}

unsavedUploadCleaner.removeJob({ cdnUrl });

await Promise.all([

db.presigned.delete({ userId: user.id }),

db.user.update({ userId: user.id, values: { avatar: newAvatar } }),

]);

return jsonOk<CheckAndSaveUploadedAvatarRes>({ savedImg: newAvatar });

};

const deleteAvatar: RequestHandler = async ({ locals }) => {

const { user } = await locals.seshHandler.userOrRedirect();

if (!user.avatar) return jsonFail(404, 'No avatar to delete');

const promises: Array<Promise<unknown>> = [db.user.update({ userId: user.id, values: { avatar: null } })];

if (objectStorage.keyController.is.cdnUrl(user.avatar.url)) {

const key = objectStorage.keyController.transform.cdnUrlToKey(user.avatar.url);

promises.push(

objectStorage.delete({

key,

guard: () => objectStorage.keyController.guard.user.avatar({ key, ownerId: user.id }),

}),

objectStorage.invalidateCDN({ keys: [key] }),

);

}

await Promise.all(promises);

return jsonOk<DeleteAvatarRes>({ message: 'Success' });

};

export const GET = getSignedAvatarUploadUrl;

export const PUT = checkAndSaveUploadedAvatar;

export const DELETE = deleteAvatar;

Let's allow our users to upload an image. We'll use an avatar as an example. This simple feature will touch a lot of topics:

Infrastructure

- AWS S3 (image storage)

- AWS Cloudfront (distribution)

- AWS Rekognition (content moderation)

- AWS IAM (security)

- PostgreSQL with Drizzle ORM (to store the user and their avatar)

- Redis (rate limiting)

Client Features

- Upload state machine controller with Svelte `$state()`

- Crop, file select, upload, and delete capabilities

- Graceful interruption and cancellation handling

- User friendly errors

- Size and file type guards

- File upload progress bar

- Images loaded from CDN


Server Guards

- User authorization

- Rate limits

- Explicit content deletion

- Image storage access authorization

- Size and file type limits

- Zero trust client relationship

Of course, there are many alternatives to each infrastructure choice. For example, ImageKit could be used as the image storage and CDN, an in-memory cache such as ttlcache could be used as the kv store, Google Cloud Vision could be used for content moderation, and so on. To keep it simple, we'll use AWS for everything except the database and kv store, which will be hosted directly on the server.

Run the `pnpm dev:up` script in this repo's package.json to create the necessary database and kv docker containers.

Upload Flow Options

The basic flow is simple. Get a file input from the user, upload it to storage, and save a link in a database.

However, there are multiple possible implementation variants to our flow. There are also security features or UI enhancements we'll want to implement. First, let's evaluate a few different ways our basic flow could be implemented so we can choose one.

0.1 Server Heavy

The Flow

- The client uploads a file to the server.

- The server checks user authorization, rate limits the request, checks the file size, and checks the content moderation with `Rekognition`.

- The server uploads the file to an `S3` bucket.

- The server saves the file url into the database.

- In order to show the client the progress that has been made, the server pushes server-sent events with a connection made with `ReadableStream` and `EventSource`.

Pros

- Simple to make safe. The server has complete control.

Cons

- Slow. The file has to make two trips (from client to server to AWS).

- Memory inefficient. The server has to hold the file in memory during the entire pipeline.

0.2 With Webhook

The Flow

- The server checks user authorization, rate limits the request, generates an upload token with a predetermined size, and sends the presigned url to the client.

- The client uses the token to upload the file to an `S3` bucket.

- An AWS event notification notifies the server that a file has been created.

- The server validates the image with `Rekognition` (and deletes it if it fails validation) before saving the url to the database.

Pros

- Memory efficient. The file is never on the server.

- Simple to make safe. We rely on AWS, not the client to notify the server between steps.

Cons

- Variable speed. Event notifications can take over a minute.

- Higher complexity. Involves setting up an event notification with a lambda that POSTs the event notification data to our server.

- Harder to show the client update progress. If the updates were sent via a long lived connection (server-sent events or websockets) with an app scaled to multiple instances, the client request and the AWS notification might hit different instances. The client would have to poll the server or an adapter would be necessary to broadcast the event to the correct app instance.

0.3 Guarded Client Control

The Flow

- The server checks user authorization, rate limits the request, generates an upload token with a predetermined size, stores the presigned url, creates a cleanup job, and sends the presigned url to the client.

- The client uses the token to upload the file to an `S3` bucket.

- The client notifies the server that the image has been uploaded. The server confirms the image has truly been uploaded to the presigned url location, validates the image with `Rekognition`, removes the cleanup job, saves the url to the database, and returns the response to the client.

- If the client uploads to the url but doesn't notify us (either due to malicious intent or via a canceled/interrupted request), the cleanup job will delete it.
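The server-side bookkeeping in this flow can be sketched with in-memory stand-ins. This is only an illustration under stated assumptions: `GuardedUploadBook`, its method names, and the key format are hypothetical, a `Set` stands in for the bucket, and the moderation step is left out. The point it shows is that the server only ever trusts its own record of the key it signed, never a url reported by the client.

```typescript
// Hypothetical in-memory sketch of the guarded flow's server bookkeeping.
// A real app would use a database table, object storage, and a delayed job queue.
type ConfirmResult = { ok: true; key: string } | { ok: false; reason: string };

class GuardedUploadBook {
  private presigned = new Map<string, string>(); // userId -> the single key we signed for them
  private cleanupJobs = new Set<string>(); // keys to delete unless the upload is confirmed
  private issued = 0;
  private storage: Set<string>; // stand-in for the bucket: keys that currently exist

  constructor(storage: Set<string>) {
    this.storage = storage;
  }

  /** Step 1: record the signed key and schedule its cleanup before handing it to the client. */
  issue(userId: string): string {
    const key = `avatars/${userId}/${++this.issued}`;
    this.presigned.set(userId, key); // insert or overwrite: one pending upload per user
    this.cleanupJobs.add(key);
    return key;
  }

  /** Step 3: the client claims it uploaded. Look the key up ourselves and verify the object exists. */
  confirm(userId: string): ConfirmResult {
    const key = this.presigned.get(userId);
    if (key === undefined) return { ok: false, reason: 'no presigned url on record' };
    this.presigned.delete(userId);
    this.cleanupJobs.delete(key);
    if (!this.storage.has(key)) return { ok: false, reason: 'nothing was uploaded' };
    return { ok: true, key };
  }

  /** Step 4: the delayed job deletes the object only if nobody confirmed it in time. */
  runCleanupJob(key: string): boolean {
    if (!this.cleanupJobs.delete(key)) return false; // job was removed, so the upload was handled
    return this.storage.delete(key);
  }
}
```

A confirmed upload survives its cleanup job because the job was removed, while an object that was uploaded but never confirmed is deleted when the job runs.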

Pros

- Fast. The file goes directly from the client to AWS.

- Memory efficient. The file is never on the server.

- Easy to show progress. We can use three distinct http requests and the upload can use an `XMLHttpRequest` with `req.upload.addEventListener('progress', cb)`.
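Because the upload is only one of three requests, its byte progress has to be mapped onto a slice of the overall progress bar. A minimal sketch of that mapping follows, assuming `loaded` and `total` come from the `progress` event and that `scaledProgress` is a hypothetical helper name. It mirrors the `Math.max` and `scale` arithmetic used by the upload function earlier in this article.

```typescript
/**
 * Map raw upload bytes onto a slice of the overall progress bar.
 * The result never moves backwards, so earlier progress is preserved.
 */
function scaledProgress(a: { current: number; loaded: number; total: number; scale: number }): number {
  if (a.total <= 0) return a.current; // length not computable: keep the current value
  const next = (a.loaded / a.total) * 100 * a.scale;
  return Math.max(a.current, next);
}
```

With a `scale` of 0.9, a finished upload leaves the bar at 90%, and the remaining 10% is reserved for the final request that saves the url to the database.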

Cons

- Moderate complexity to make safe. We have to store knowledge of the presigned url request and also add a cleanup job to thwart malicious actors.

Choice

This article implements the guarded client control method. It provides the best client experience (fastest, most visible progress), incurs minimal strain on the server, avoids extra AWS complexity, and isn't too hard to protect.

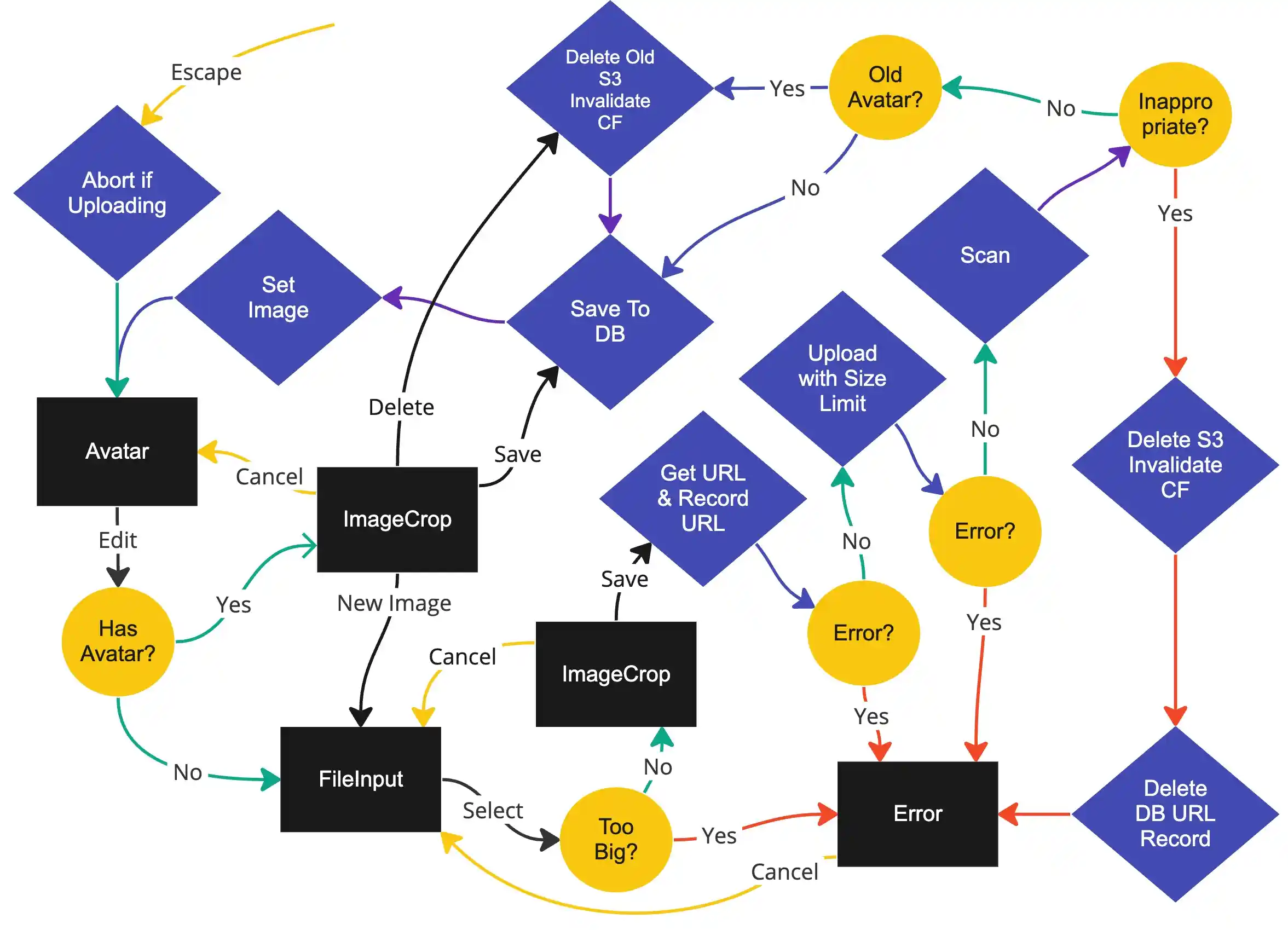

If we try to view the server, client, and services all at once, we end up with something a hard to reason with (rate limiting and presigned url storage not shown):

By focusing on client, AWS, and server separately, the flow become very easy to reason about. We'll begin with the client. Separating the logic from the UI and breaking it down into finite states will make it trivial to understand.

State Controller

As usual, we'll start by defining some Types and Interfaces that will simplify the implementation.

Crop Types

One of our requirements is that the user should be able to crop their image. We'll do a CSS crop so we don't have to worry about modifying the image. This website uses @samplekit/svelte-crop-window, which is a loving rune/builder based rewrite of sabine/svelte-crop-window. This package will handle the cropping logic for us, and we'll simply store that data alongside the url.

import { z } from 'zod';

export const cropSchema = z

.object({

position: z.object({ x: z.number(), y: z.number() }),

aspect: z.number(),

rotation: z.number(),

scale: z.number(),

})

.default({ position: { x: 0, y: 0 }, aspect: 1, rotation: 0, scale: 1 });

export type CropValue = z.infer<typeof cropSchema>;

export const croppedImgSchema = z.object({

url: z.string().url(),

crop: cropSchema,

});

export type CroppedImg = z.infer<typeof croppedImgSchema>;

States

We need to break the flow into separate states. That could be done in many ways, but I'm going to break them along the following lines.

| State Change Breakpoint | Reasoning |

|---|---|

| There's a decision branch | Allow for easily composable pipelines |

| An async function is being awaited | Allow cancellation logic to be attached to the state, so a cancel that happens while the promise is still pending can be handled |

| Something must be shown in the UI | Allow getting user input, showing spinners, updating progress bars, etc. |
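The second row is the least obvious, so here is a minimal sketch of it under simplified assumptions. `MiniController` and its three states are hypothetical and much smaller than the real controller. The idea is that each awaited step gets its own state, and after the await the controller checks whether a cancel moved it to 'idle' before it writes the next state.

```typescript
type Idle = { state: 'idle' };
type Saving = { state: 'saving'; promise: Promise<string> };
type Done = { state: 'done'; value: string };
type State = Idle | Saving | Done;

class MiniController {
  state: State = { state: 'idle' };

  // A method (not an inline comparison) so TypeScript re-reads the full union after the await.
  private isIdle(): boolean {
    return this.state.state === 'idle';
  }

  /** Cancel from any state by moving to 'idle'. */
  cancel(): Idle {
    return (this.state = { state: 'idle' });
  }

  /** States: 'idle' -> 'saving' -> 'done', unless cancel() ran while the promise was pending. */
  async save(work: () => Promise<string>): Promise<Idle | Done> {
    const promise = work();
    this.state = { state: 'saving', promise };
    const value = await promise;
    if (this.isIdle()) return { state: 'idle' }; // canceled mid-await: don't overwrite 'idle'
    return (this.state = { state: 'done', value });
  }
}
```

If `cancel()` is called while `save` is awaiting, the late result is dropped and the controller stays in 'idle' instead of jumping to 'done'.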

Static

We need some static states:

export type Idle = { state: 'idle' }; // entry state

export type Err = {

state: 'error';

img: { url: string | null; crop: CropValue | null };

errorMsgs: [string, string] | [string, null]; // [title, body]

};

export type Completed = { state: 'completed'; savedImg: CroppedImg | null };

Preexisting

If the user has an avatar already, we'll want to allow them to crop it, save the updated crop value, and delete it.

export type CroppingPreexisting = {

state: 'cropping_preexisting'; // entry state

url: string;

crop: CropValue;

/**

* Update a preexisting image's crop value.

* States: 'cropping_preexisting' -> 'db_updating_preexisting' -> 'completed'

*/

saveCropValToDb({ crop }: { crop: CropValue }): Promise<Idle | Err | Completed>;

/**

* Delete a preexisting image.

* States: 'cropping_preexisting' -> 'deleting_preexisting' -> 'completed'

*/

deleteImg: () => Promise<Idle | Err | Completed>;

};

export type DbUpdatingPreexisting = {

state: 'db_updating_preexisting';

url: string;

crop: CropValue;

updateDbPromise: Promise<Result<{ savedImg: CroppedImg | null }>>;

};

export type DeletingPreexisting = {

state: 'deleting_preexisting';

url: string;

crop: CropValue;

deletePreexistingImgPromise: Promise<Result<Result.Success>>;

};

New

If the user doesn't have an image, or if they are selecting a new image, we'll need states to facilitate selecting the file, loading it into memory, cropping it, getting the presigned url, sending it to AWS, and saving the url to the DB.

import type { Tweened } from 'svelte/motion';

export type FileSelecting = {

state: 'file_selecting'; // entry state

/**

* Convert a file into an in memory uri, guarding max file size.

* States: 'file_selecting' -> 'uri_loading' -> 'uri_loaded'

*/

loadFiles: (a: { files: File[]; MAX_UPLOAD_SIZE: number }) => Promise<Idle | Err | UriLoaded>;

};

export type UriLoading = {

state: 'uri_loading';

file: File;

uriPromise: Promise<{ uri: string; error?: never } | { uri?: never; error: Error | DOMException }>;

};

export type UriLoaded = {

state: 'uri_loaded';

file: File;

uri: string;

/** States: 'uri_loaded' -> 'cropped' */

skipCrop: (a?: { crop: CropValue }) => Cropped;

/** States: 'uri_loaded' -> 'cropping' */

startCrop: () => Cropping;

};

export type Cropping = {

state: 'cropping';

file: File;

uri: string;

/** States: 'cropping' -> 'cropped' */

loadCropValue: ({ crop }: { crop: CropValue }) => Cropped;

};

export type Cropped = {

state: 'cropped';

file: File;

uri: string;

crop: CropValue;

/** States: 'cropped' -> 'upload_url_fetching' -> 'image_storage_uploading' -> 'db_saving' -> 'completed' */

uploadCropped(): Promise<Idle | Err | Completed>;

};

export type UploadUrlFetching = {

state: 'upload_url_fetching';

file: File;

uri: string;

crop: CropValue;

uploadProgress: Tweened<number>;

getUploadArgsPromise: Promise<Result<{ url: string; formDataFields: Record<string, string> }>>;

};

export type ImageStorageUploading = {

state: 'image_storage_uploading';

uri: string;

crop: CropValue;

imageUploadPromise: Promise<Result<{ status: number }>>;

uploadProgress: Tweened<number>;

};

export type DbSaving = {

state: 'db_saving';

uri: string;

crop: CropValue;

saveToDbPromise: Promise<Result<{ savedImg: CroppedImg | null }>>;

uploadProgress: Tweened<number>;

};

Union

Putting it all together, the controller has the following states:

export type CropControllerState =

| Idle

| Err

| Completed

| CroppingPreexisting

| DbUpdatingPreexisting

| DeletingPreexisting

| FileSelecting

| UriLoading

| UriLoaded

| Cropping

| Cropped

| UploadUrlFetching

| ImageStorageUploading

| DbSaving;

export type StateName = CropControllerState['state'];

Callback Types

Notice that each of the three state groups has a logical entry point. We start with the Idle state. If the user already has an avatar, we can then transition to the CroppingPreexisting state. Otherwise, we can use the FileSelecting state.

Some states have methods which can be used to transition to other states. Others don't because they are simply transitional UI states in the pipeline that don't need user interaction.
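To make the pattern concrete, here is a minimal sketch with two hypothetical states (Selecting and Loaded are invented for illustration and are not part of the controller). It uses a closure instead of a class with runes so that it runs on its own. The transition method lives on the state object itself, so a caller can only reach it after narrowing on state.

```typescript
// Hypothetical two-state machine; the names are invented for this sketch.
type Selecting = { state: 'selecting'; load: (name: string) => Loaded };
type Loaded = { state: 'loaded'; name: string };
type DemoState = Selecting | Loaded;

const createDemoController = () => {
  let current: DemoState;

  // Entry point. The transition method closes over `current`, so it only
  // exists on (and can only be called from) the 'selecting' state.
  const toSelecting = (): Selecting =>
    (current = {
      state: 'selecting',
      load: (name: string): Loaded => (current = { state: 'loaded', name }),
    });

  current = toSelecting();

  return {
    get value(): DemoState {
      return current;
    },
    toSelecting,
  };
};

// Narrowing on the `state` discriminant exposes only that state's fields.
const label = (s: DemoState): string => {
  switch (s.state) {
    case 'selecting':
      return 'waiting for a file';
    case 'loaded':
      return `loaded ${s.name}`;
  }
};

const demo = createDemoController();
const before = label(demo.value);
const s = demo.value;
if (s.state === 'selecting') s.load('avatar.png');
const after = label(demo.value);
```

States without methods, like 'loaded' here, are the purely transitional ones: the UI can render them but has nothing to call.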

In order to start implementing those states, we will need to accept some callbacks in our constructor.

import type { CroppedImg, CropValue } from '$lib/image/common';

import type { Result } from '$lib/utils/common';

import type { Tweened } from 'svelte/motion';

type GetUploadArgs = () => Promise<Result<{ url: string; formDataFields: Record<string, string> }>>;

type Upload = (a: { url: string; formData: FormData; uploadProgress: { tweened: Tweened<number>; scale: number } }) => {

promise: Promise<Result<{ status: number }>>;

abort: () => void;

};

type SaveToDb = (a: { crop: CropValue }) => Promise<Result<{ savedImg: CroppedImg | null }>>;

type DeletePreexistingImg = () => Promise<Result<Result.Success>>;

type SaveCropToDb = (a: { crop: CropValue }) => Promise<Result<{ savedImg: CroppedImg | null }>>;

Implementation

Enough with the types – let's implement! We'll obviously need to store our state and callbacks. Additionally, if the user exits in the middle of an async function, we'll want to be able to gracefully shut down, so let's also add a cleanup method for that. Finally, we'll derive isIdle for convenience.
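The shutdown idea can be sketched on its own before we wire it into the class. This is a simplified stand-in, not the controller: `createSaver`, `save`, and `onAbort` are hypothetical names, and a plain `isIdle()` function plays the role of the derived value. The point is the check after every await: if something moved us to idle while we were suspended, we bail out instead of overwriting the state.

```typescript
type DemoState = { state: 'idle' } | { state: 'saving' } | { state: 'completed' };

// `save` and `onAbort` are injected stand-ins; nothing here touches Svelte or a server.
const createSaver = (save: () => Promise<void>) => {
  let current: DemoState = { state: 'idle' };
  let cleanup: null | (() => void) = null;

  const isIdle = (): boolean => current.state === 'idle';

  /** Run any pending cleanup and move to 'idle'. */
  const toIdle = (): DemoState => {
    cleanup?.();
    cleanup = null;
    return (current = { state: 'idle' });
  };

  const run = async (onAbort: () => void): Promise<DemoState> => {
    current = { state: 'saving' };
    cleanup = onAbort;
    await save();
    // toIdle() may have run while we were suspended. If so, it already
    // called cleanup, and we must not overwrite the idle state.
    if (isIdle()) return { state: 'idle' };
    cleanup = null;
    return (current = { state: 'completed' });
  };

  return { state: () => current.state, toIdle, run };
};

// Usage: the user cancels while the save is still in flight.
const log: string[] = [];
let release: () => void = () => {};
const saver = createSaver(() => new Promise<void>((resolve) => { release = resolve; }));
const pending = saver.run(() => { log.push('aborted'); });
saver.toIdle(); // cancel mid-await
release(); // the save finishes afterwards
```

Without the `isIdle()` check, the late-arriving save would flip the state to 'completed' after the user had already left.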

Idle Entrypoint

Our trivial idle entrypoint is the default state of the controller.

export class CropImgUploadController {

#state: CropControllerState = $state.raw({ state: 'idle' });

#isIdle: boolean = $derived(this.#state.state === 'idle');

#cleanup: null | (() => void) = null;

#getUploadArgs: GetUploadArgs;

#upload: Upload;

#saveToDb: SaveToDb;

#delImg: DeletePreexistingImg;

#saveCropToDb: SaveCropToDb;

get value() {

return this.#state;

}

/** Clean up and move to 'idle' */

toIdle = async (): Promise<Idle> => {

this.#cleanup?.();

return (this.#state = { state: 'idle' });

};

constructor(a: {

getUploadArgs: GetUploadArgs;

upload: Upload;

saveToDb: SaveToDb;

delImg: DeletePreexistingImg;

saveCropToDb: SaveCropToDb;

}) {

this.#getUploadArgs = a.getUploadArgs;

this.#upload = a.upload;

this.#saveToDb = a.saveToDb;

this.#delImg = a.delImg;

this.#saveCropToDb = a.saveCropToDb;

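// Returning toIdle from the effect registers it as the teardown, so cleanup runs when the owning component is destroyed.

$effect(() => this.toIdle);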

}

}

Preexisting Entrypoint

Moving on to our second entrypoint – CroppingPreexisting:

export class CropImgUploadController {

...

/** Clean up and move to 'cropping_preexisting' */

toCropPreexisting = ({ crop, url }: { crop: CropValue; url: string }) => {

this.#cleanup?.();

this.#state = {

state: 'cropping_preexisting',

crop,

url,

saveCropValToDb: ({ crop }) => this.#saveCropValToDb({ crop, url }),

deleteImg: () => this.#deleteImg({ crop, url }),

};

};

#saveCropValToDb = async ({ crop, url }: { crop: CropValue; url: string }): Promise<Idle | Err | Completed> => {

const updateDbPromise = this.#saveCropToDb({ crop });

this.#state = { state: 'db_updating_preexisting', updateDbPromise, crop, url };

const { data, error } = await updateDbPromise;

if (this.#isIdle) return { state: 'idle' };

else if (error) return (this.#state = { state: 'error', img: { url, crop }, errorMsgs: [error.message, null] });

else return (this.#state = { state: 'completed', savedImg: data.savedImg });

};

#deleteImg = async ({ crop, url }: { crop: CropValue; url: string }): Promise<Idle | Err | Completed> => {

const deletePreexistingImgPromise = this.#delImg();

this.#state = { state: 'deleting_preexisting', deletePreexistingImgPromise, crop, url };

const { error } = await deletePreexistingImgPromise;

if (this.#isIdle) return { state: 'idle' };

else if (error) return (this.#state = { state: 'error', img: { url, crop }, errorMsgs: [error.message, null] });

else return (this.#state = { state: 'completed', savedImg: null });

};

}

New Entrypoint

We only have one more entry point to implement.

export class CropImgUploadController {

...

/** Clean up and move to 'file_selecting' */

toFileSelect = () => {

this.#cleanup?.();

this.#state = { state: 'file_selecting', loadFiles: this.#loadFiles };

};

}

In #loadFiles we load the file, turn it into a data uri, and transition to uri_loaded. That state has two transition methods to either start cropping or simply use the default crop value.

import { defaultCropValue, fileToDataUrl, humanReadableFileSize } from '$lib/image/client';

export class CropImgUploadController {

...

#loadFiles = async ({

files,

MAX_UPLOAD_SIZE,

}: {

files: File[];

MAX_UPLOAD_SIZE: number;

}): Promise<Idle | Err | UriLoaded> => {

const file = files[0];

if (!file) {

return (this.#state = { state: 'error', img: { url: null, crop: null }, errorMsgs: ['No file selected', null] });

}

if (file.size > MAX_UPLOAD_SIZE) {

return (this.#state = {

state: 'error',

img: { url: null, crop: null },

errorMsgs: [

`File size (${humanReadableFileSize(file.size)}) must be less than ${humanReadableFileSize(MAX_UPLOAD_SIZE)}`,

null,

],

});

}

const uriPromise = fileToDataUrl(file);

this.#state = { state: 'uri_loading', file, uriPromise };

const { uri } = await uriPromise;

if (this.#isIdle) return { state: 'idle' };

if (!uri) {

return (this.#state = {

state: 'error',

img: { url: null, crop: null },

errorMsgs: ['Error reading file', null],

});

}

return (this.#state = {

state: 'uri_loaded',

file,

uri,

skipCrop: ({ crop } = { crop: defaultCropValue }) => this.#toCropped({ crop, file, uri }),

startCrop: () =>

(this.#state = {

state: 'cropping',

file,

uri,

loadCropValue: ({ crop }) => this.#toCropped({ crop, file, uri }),

}),

});

};

#toCropped = ({ crop, file, uri }: { crop: CropValue; file: File; uri: string }): Cropped => {

return (this.#state = {

state: 'cropped',

crop,

file,

uri,

uploadCropped: async () => this.#uploadPipeline({ crop, file, uri }),

});

};

}

export const fileToDataUrl = (

blob: Blob,

): Promise<{ uri: string; error?: never } | { uri?: never; error: Error | DOMException }> => {

return new Promise((resolve) => {

const reader = new FileReader();

reader.onload = (_e) => resolve({ uri: reader.result as string });

reader.onerror = (_e) => resolve({ error: reader.error ?? new Error('Unknown error') });

reader.onabort = (_e) => resolve({ error: new Error('Read aborted') });

reader.readAsDataURL(blob);

});

};

export const humanReadableFileSize = (size: number) => {

if (size < 1024) return `${size}B`;

if (size < 1024 * 1024) return `${(size / 1024).toFixed(1)}kB`; // toPrecision(2) would render 512 as "5.1e+2"

return `${(size / (1024 * 1024)).toFixed(1)}MB`;

};

Finally we are at our last method. It's also the largest. In #uploadPipeline we will get the upload url, upload the file, and save it to the DB.

import { circOut } from 'svelte/easing';

import { tweened, type Tweened } from 'svelte/motion';

export class CropImgUploadController {

...

#uploadPipeline = async ({

file,

uri,

crop,

}: {

file: File;

uri: string;

crop: CropValue;

}): Promise<Idle | Err | Completed> => {

/** Get the upload url from our server (progress 3-10%) */

const uploadProgress = tweened(0, { easing: circOut });

const getUploadArgsPromise = this.#getUploadArgs();

uploadProgress.set(3);

this.#state = { state: 'upload_url_fetching', file, uri, crop, uploadProgress, getUploadArgsPromise };

const getUploadArgsPromised = await getUploadArgsPromise;

if (this.#isIdle) return { state: 'idle' };

if (getUploadArgsPromised.error) {

return (this.#state = {

state: 'error',

img: { url: uri, crop },

errorMsgs: [getUploadArgsPromised.error.message, null],

});

}

uploadProgress.set(10);

const { url, formDataFields } = getUploadArgsPromised.data;

const formData = new FormData();

for (const [key, value] of Object.entries(formDataFields)) {

formData.append(key, value);

}

formData.append('file', file);

/** Upload file to image storage (progress 10-90%) */

const { abort: abortUpload, promise: imageUploadPromise } = this.#upload({

url,

formData,

uploadProgress: { tweened: uploadProgress, scale: 0.9 },

});

this.#cleanup = () => abortUpload();

this.#state = {

state: 'image_storage_uploading',

crop,

uri,

imageUploadPromise,

uploadProgress,

};

const imageUploadPromised = await imageUploadPromise;

this.#cleanup = null;

if (this.#isIdle) return { state: 'idle' };

if (imageUploadPromised.error) {

return (this.#state = {

state: 'error',

img: { url: uri, crop },

errorMsgs: [imageUploadPromised.error.message, null],

});

}

/** Save url to db (progress 90-100%) */

const interval = setInterval(() => {

uploadProgress.update((v) => {

const newProgress = Math.min(v + 1, 100);

if (newProgress === 100) clearInterval(interval);

return newProgress;

});

}, 20);

const saveToDbPromise = this.#saveToDb({ crop });

this.#cleanup = () => clearInterval(interval);

this.#state = {

state: 'db_saving',

crop,

uri,

saveToDbPromise,

uploadProgress,

};

const saveToDbPromised = await saveToDbPromise;

this.#cleanup = null;

if (this.#isIdle) return { state: 'idle' };

if (saveToDbPromised.error) {

return (this.#state = {

state: 'error',

img: { url: uri, crop },

errorMsgs: [saveToDbPromised.error.message, null],

});

}

clearInterval(interval);

uploadProgress.set(100);

return (this.#state = { state: 'completed', savedImg: saveToDbPromised.data.savedImg });

};

}

And... we're done! But not really. We have some dependency injection going on here. Namely, the five callback functions. Let's finish up our client code with the required UI components and then we'll tackle those callbacks.
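One upside of injecting the callbacks is that the controller can be exercised without a server. Here's a rough sketch of what a fake for one of them might look like. The `Result` shape below is an assumption (the real type lives in `$lib/utils/common` and may differ), and `fakeSaveCropToDb`, `failingSaveCropToDb`, and `summarize` are hypothetical helpers invented for this example.

```typescript
// Assumed shape of Result; the real type in '$lib/utils/common' may differ.
type Result<T> = { data: T; error?: never } | { data?: never; error: { message: string } };

type CropValue = { position: { x: number; y: number }; aspect: number; rotation: number; scale: number };
type CroppedImg = { url: string; crop: CropValue };
type SaveCropToDb = (a: { crop: CropValue }) => Promise<Result<{ savedImg: CroppedImg | null }>>;

// Fake that records its calls and echoes the crop back (stands in for updateAvatarCrop.send).
const recordedCrops: CropValue[] = [];
const fakeSaveCropToDb: SaveCropToDb = async ({ crop }) => {
  recordedCrops.push(crop);
  return { data: { savedImg: { url: 'https://example.com/avatar.png', crop } } };
};

// Fake that always fails, handy for driving the 'error' state.
const failingSaveCropToDb: SaveCropToDb = async () => ({ error: { message: 'DB unavailable' } });

// Consumes a callback the way the controller does: await, then branch on `error`.
const summarize = async (save: SaveCropToDb, crop: CropValue): Promise<string> => {
  const res = await save({ crop });
  if (res.error) return `error: ${res.error.message}`;
  return `completed: ${res.data?.savedImg?.url ?? 'no image'}`;
};

const demoCrop: CropValue = { position: { x: 0, y: 0 }, aspect: 1, rotation: 0, scale: 1 };
const outcomes = Promise.all([
  summarize(fakeSaveCropToDb, demoCrop),
  summarize(failingSaveCropToDb, demoCrop),
]);
```

Passing fakes like these to the constructor would let a test walk the state machine through both the 'completed' and 'error' branches.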

UI

Base Components

We'll need a few components to show our UI state. We'll make them mostly dumb components so the logic can be isolated inside the file where the CropImgUploadController is instantiated.

Avatar Editor

The final UI component will be the controller component AvatarEditor.svelte that orchestrates the UI components created above using the CropImgUploadController. It receives an avatar from the parent and passes the changes back up with onCancel and onNewAvatar callbacks. This component lives on a page behind an auth guard. SampleKit uses its own auth package, but there are many other options, such as Lucia Auth.

<script lang="ts">

import { fade } from 'svelte/transition';

import { FileInput } from '$lib/components';

import { ImageCrop, UploadProgress, ImageCardBtns, ImageCardOverlays } from '$lib/image/components';

import { objectStorage, CropImgUploadController } from '$lib/object-storage/client';

import ConfirmDelAvatarModal from './ConfirmDelAvatarModal.svelte';

import { updateAvatarCrop } from './avatar/crop.json/client';

import { getSignedAvatarUploadUrl, checkAndSaveUploadedAvatar, deleteAvatar } from './avatar/upload.json/client';

import { MAX_UPLOAD_SIZE } from './avatar/upload.json/common';

interface Props {

avatar: DB.User['avatar'];

onNewAvatar: (img: DB.User['avatar']) => void | Promise<void>;

onCancel: () => void;

}

const { avatar, onCancel, onNewAvatar }: Props = $props();

const controller = new CropImgUploadController({

saveCropToDb: updateAvatarCrop.send,

delImg: deleteAvatar.send,

getUploadArgs: getSignedAvatarUploadUrl.send,

upload: objectStorage.upload,

saveToDb: checkAndSaveUploadedAvatar.send,

});

if (avatar) controller.toCropPreexisting(avatar);

else controller.toFileSelect();

const s = $derived(controller.value);

let deleteConfirmationModalOpen = $state(false);

</script>

{#if s.state === 'file_selecting'}

<FileInput

onSelect={async (files: File[]) => {

const erroredCanceledOrLoaded = await s.loadFiles({ files, MAX_UPLOAD_SIZE });

if (erroredCanceledOrLoaded.state === 'uri_loaded') erroredCanceledOrLoaded.startCrop();

}}

accept="image/jpeg, image/png"

/>

{:else}

<div class="relative aspect-square h-80 w-80 sm:h-[32rem] sm:w-[32rem]">

{#if s.state === 'error'}

<ImageCardOverlays

img={{ kind: 'overlay', url: s.img.url, crop: s.img.crop, blur: true }}

overlay={{ red: true }}

{onCancel}

errorMsgs={s.errorMsgs}

/>

{:else if s.state === 'cropping_preexisting'}

<ImageCrop

url={s.url}

crop={s.crop}

onSave={async (crop) => {

const erroredCanceledOrCompleted = await s.saveCropValToDb({ crop });

if (erroredCanceledOrCompleted.state === 'completed') await onNewAvatar(erroredCanceledOrCompleted.savedImg);

}}

/>

<ImageCardBtns {onCancel} onNew={controller.toFileSelect} onDelete={() => (deleteConfirmationModalOpen = true)} />

<ConfirmDelAvatarModal

bind:open={deleteConfirmationModalOpen}

handleDelete={async () => {

const erroredCanceledOrCompleted = await s.deleteImg();

if (erroredCanceledOrCompleted.state === 'completed') await onNewAvatar(erroredCanceledOrCompleted.savedImg);

}}

/>

{:else if s.state === 'db_updating_preexisting'}

<ImageCardOverlays img={{ kind: 'overlay', url: s.url, crop: s.crop }} />

<ImageCardBtns loader />

{:else if s.state === 'deleting_preexisting'}

<ImageCardOverlays img={{ kind: 'overlay', url: s.url, crop: s.crop }} overlay={{ pulsingWhite: true }} />

<ImageCardBtns loader />

{:else if s.state === 'uri_loading'}

<ImageCardOverlays img={{ kind: 'skeleton' }} />

{:else if s.state === 'uri_loaded'}

<ImageCardOverlays img={{ kind: 'overlay', url: s.uri, crop: null }} />

{:else if s.state === 'cropping'}

<ImageCrop

url={s.uri}

onSave={async (crop) => {

const erroredCanceledOrCompleted = await s.loadCropValue({ crop }).uploadCropped();

if (erroredCanceledOrCompleted.state === 'completed') await onNewAvatar(erroredCanceledOrCompleted.savedImg);

}}

/>

<ImageCardBtns {onCancel} onNew={controller.toFileSelect} />

{:else if s.state === 'cropped'}

<ImageCardOverlays img={{ kind: 'overlay', url: s.uri, crop: s.crop }} />

{:else if s.state === 'upload_url_fetching' || s.state === 'image_storage_uploading' || s.state === 'db_saving'}

<ImageCardOverlays img={{ kind: 'overlay', url: s.uri, crop: s.crop }} overlay={{ pulsingWhite: true }} />

<ImageCardBtns loader />

<div out:fade><UploadProgress uploadProgress={s.uploadProgress} /></div>

{:else if s.state === 'completed'}

<ImageCardOverlays img={{ kind: 'full', url: s.savedImg?.url, crop: s.savedImg?.crop }} />

<ImageCardBtns badgeCheck />

{:else if s.state === 'idle'}

<!-- -->

{/if}

</div>

{/if}

We've finished the controller and UI, but we still have to implement the five callback functions that the CropImgUploadController requires:

import { objectStorage, CropImgUploadController } from '$lib/object-storage/client';

import { updateAvatarCrop } from './avatar/crop.json/client';

import { getSignedAvatarUploadUrl, checkAndSaveUploadedAvatar, deleteAvatar } from './avatar/upload.json/client';

import { MAX_UPLOAD_SIZE } from './avatar/upload.json/common';

Dependency Injection Callbacks

Of the five callbacks, GetUploadArgs, SaveToDb, DeletePreexistingImg, and SaveCropToDb are requests to our own server endpoints. They're implemented with the TypeSafe Fetch Handler we created in a previous article. Upload, however, is a request made directly to AWS using the credentials the server sent to the client, along with an uploadProgress object that shows the status of the upload to the user. Let's tackle that first.

Uploader

The upload function is called by the client to upload directly to the presigned url. In order to keep the uploadProgress in sync with the upload state, we'll use an XMLHttpRequest, which supports a progress listener, instead of fetch. Because XMLHttpRequest uses listeners and callbacks, we'll promisify it and return the promise and an abort function separately.
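As a rough sketch of that shape (not the actual `objectStorage.upload`), the function below wraps a listener-based request in a promise and hands back `abort` alongside it. To keep it runnable outside a browser, it takes an `XhrLike` object, which is a hand-written subset of the XMLHttpRequest surface and an assumption of this example. It also reports progress through a plain `onProgress` callback rather than a tweened store.

```typescript
// Hand-written subset of the XMLHttpRequest surface used below (an assumption
// of this sketch, so it can run against a fake outside the browser).
interface XhrLike {
  status: number;
  upload: { onprogress: ((e: { loaded: number; total: number; lengthComputable: boolean }) => void) | null };
  onload: (() => void) | null;
  onerror: (() => void) | null;
  onabort: (() => void) | null;
  open(method: string, url: string): void;
  send(body: unknown): void;
  abort(): void;
}

type UploadResult = { data: { status: number }; error?: never } | { data?: never; error: { message: string } };

const uploadWithProgress = (a: {
  xhr: XhrLike;
  url: string;
  body: unknown;
  onProgress: (percent: number) => void;
  scale: number; // e.g. 0.9 leaves the last 10% for later pipeline steps
}): { promise: Promise<UploadResult>; abort: () => void } => {
  const { xhr, url, body, onProgress, scale } = a;
  const promise = new Promise<UploadResult>((resolve) => {
    xhr.upload.onprogress = (e) => {
      if (e.lengthComputable) onProgress((e.loaded / e.total) * 100 * scale);
    };
    xhr.onload = () => {
      if (xhr.status >= 200 && xhr.status < 300) resolve({ data: { status: xhr.status } });
      else resolve({ error: { message: `Upload failed with status ${xhr.status}` } });
    };
    xhr.onerror = () => resolve({ error: { message: 'Network error' } });
    xhr.onabort = () => resolve({ error: { message: 'Upload aborted' } });
    xhr.open('POST', url);
    xhr.send(body);
  });
  // The promise never rejects; callers branch on `error` and can cancel via `abort`.
  return { promise, abort: () => xhr.abort() };
};

// A fake transport that reports half the bytes sent, then waits to be aborted.
const reported: number[] = [];
let abortCalls = 0;
const fakeXhr: XhrLike = {
  status: 0,
  upload: { onprogress: null },
  onload: null,
  onerror: null,
  onabort: null,
  open() {},
  send() {
    this.upload.onprogress?.({ loaded: 50, total: 100, lengthComputable: true });
  },
  abort() {
    abortCalls += 1;
    this.onabort?.();
  },
};

const { promise: uploadPromise, abort: abortUpload } = uploadWithProgress({
  xhr: fakeXhr,
  url: 'https://example.com/upload',
  body: 'bytes',
  onProgress: (p) => reported.push(p),
  scale: 0.9,
});
abortUpload();
```

Returning `abort` separately is what lets the controller store it as its cleanup function while it awaits the promise.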

Client Endpoints

We're down to just the four callbacks within the two endpoints. These two client files define the typesafe fetch handlers that will correspond to the +server.ts endpoints.

That's the last of the client code, but these two fetch handlers route to unimplemented server endpoints. They will call the AWS SDKs, so we'll set up AWS and then come back to finish the marathon at the endpoints.

AWS

If you don't already have one, you will need to set up an account at aws.amazon.com. We will use four services: S3 will hold the images, Rekognition will moderate explicit content, CloudFront will serve the images, and IAM will manage access.

These services are pay per use and cost cents to test.

However, if they are not properly protected, a malicious user could use them to rack up a large bill.

Be sure to protect your keys, limit access methods, rate limit, and set up billing alerts.

Install AWS SDKs

All of the AWS code will use the official client SDKs.

"dependencies": {

"@aws-sdk/client-cloudfront": "^3.474.0",

"@aws-sdk/client-rekognition": "^3.474.0",

"@aws-sdk/client-s3": "^3.474.0",

"@aws-sdk/s3-presigned-post": "^3.478.0",

}

Using the SDKs, a client is created and then commands are sent with the client. Here are the commands we'll be using.

import { CloudFrontClient, CreateInvalidationCommand } from '@aws-sdk/client-cloudfront';

import { RekognitionClient, DetectModerationLabelsCommand } from '@aws-sdk/client-rekognition';

import { S3Client, DeleteObjectCommand } from '@aws-sdk/client-s3';

import { createPresignedPost } from '@aws-sdk/s3-presigned-post';

We need to enable the services on AWS, and then configure their permissions with an AWS IAM policy.

Enable Services

Creating the S3 Bucket

Choose "create bucket" on the S3 dashboard and create a new bucket with a unique name (for example the name of your app) and all the default settings.

Edit the CORS configuration so the client can POST directly to the AWS bucket. "Block all public access" will prevent users from uploading directly without a presigned key.

[

{

"AllowedHeaders": ["*"],

"AllowedMethods": ["POST"],

"AllowedOrigins": ["*"],

"ExposeHeaders": []

}

]

Creating the IAM Policy

At IAM, create a new user – naming it the same as your app will make it easy to remember. Use all default settings.

Create a policy that will allow creating and deleting images in the bucket.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "s3",

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:DeleteObject"

],

"Resource": [

"arn:aws:s3:::samplekit",

"arn:aws:s3:::samplekit/*"

]

}

]

}

Click "Next", give it a policy name (again I've used the name of my app), and hit "Create Policy". Done! Now we have permission to create and delete objects in the bucket.

Creating the Cloudfront Distribution

Choose "Create Distribution" at AWS Cloudfront.

- Origin Domain: Choose S3 bucket

- Origin Access -> Origin access control settings -> Choose Bucket Name

- Redirect HTTP to HTTPS: Yes

- Do not enable security protections (WAF is not pay per use)

After finishing with "Create distribution", you will be given a bucket policy. Copy this into your S3 bucket: Select Bucket -> Permissions -> Bucket Policy.

Add Cloudfront to the IAM Policy

When an S3 object is deleted, the Cloudfront cache is not automatically invalidated. We'll do that manually, and therefore cloudfront:CreateInvalidation permissions will need to be granted.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "s3",

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:DeleteObject"

],

"Resource": [

"arn:aws:s3:::samplekit",

"arn:aws:s3:::samplekit/*"

]

},

{

"Sid": "cloudfront",

"Effect": "Allow",

"Action": [

"cloudfront:CreateInvalidation"

],

"Resource": [

"arn:aws:cloudfront::069636842578:distribution/*"

]

}

]

}

Add Rekognition to the IAM Policy

We will grant rekognition:DetectModerationLabels permissions, but also s3:GetObject so Rekognition can read the image directly from the S3 bucket.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "s3",

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:DeleteObject",

"s3:GetObject"

],

"Resource": [

"arn:aws:s3:::samplekit",

"arn:aws:s3:::samplekit/*"

]

},

{

"Sid": "cloudfront",

"Effect": "Allow",

"Action": [

"cloudfront:CreateInvalidation"

],

"Resource": [

"arn:aws:cloudfront::069636842578:distribution/*"

]

},

{

"Sid": "rekognition",

"Effect": "Allow",

"Action": [

"rekognition:DetectModerationLabels"

],

"Resource": [

"*"

]

}

]

}

Let's create an IAM access key and add our environment variables.

AWS_SERVICE_REGION="" # for example: us-east-1

# https://console.aws.amazon.com/iam/home -> Select User -> Security credentials -> Create access key -> Copy access key and secret

IAM_ACCESS_KEY_ID=""

IAM_SECRET_ACCESS_KEY=""

S3_BUCKET_NAME="" # The name of the bucket you created

S3_BUCKET_URL="" # https://[bucket-name].s3.[region].amazonaws.com

# https://console.aws.amazon.com/cloudfront -> Select distribution

CLOUDFRONT_URL="" # Copy Distribution domain name (including https://)

CLOUDFRONT_DISTRIBUTION_ID="" # Copy ARN – the last characters after the final "/"

AWS Server Code

AWS SDKs

The AWS services are now configured on our account, and we are ready to use them through the SDKs. The clients are wrapped in getters so they are not created during the app build.
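The getter idea boils down to deferring construction until first use. A minimal sketch, assuming nothing about the real wrappers: `lazy` and `FakeClient` are hypothetical names, and `FakeClient` stands in for an SDK client that would normally read credentials in its constructor.

```typescript
// Wrap a factory so the instance is created on first use, then reused.
const lazy = <T>(create: () => T): (() => T) => {
  let cell: { value: T } | null = null;
  return () => {
    if (cell === null) cell = { value: create() };
    return cell.value;
  };
};

// Stand-in for an SDK client; a real one would read credentials when constructed.
let constructed = 0;
class FakeClient {
  readonly region: string;
  constructor(region: string) {
    constructed += 1;
    this.region = region;
  }
}

// Nothing is constructed when this module is evaluated (e.g. during a build),
// only when getClient() is first called at request time.
const getClient = lazy(() => new FakeClient('us-east-1'));
const constructedBeforeUse = constructed;
const first = getClient();
const second = getClient();
```

Since the instance is cached after the first call, every request handler shares the same client.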

Helpers

We need some way to organize the S3 files. We could add meta tags, but it becomes cumbersome because S3 doesn't have a way to perform CRUD actions based on the tags without intermediary requests. Instead we'll silo the files into "folders" by manipulating the key. That way, we can download the entire dev branch, delete all of a user's images, etc. Creating keys by hand on each call would be messy, so let's make a key controller.
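Before the full controller, here's the core idea in miniature. This is a simplified sketch with a hard-coded `DB_NAME` and hypothetical helper names (`avatarKey`, `userPrefix`, `parseAvatarKey`). Because the owner is encoded in the key, "all of a user's images" becomes a prefix match and ownership becomes a parse-and-compare.

```typescript
const DB_NAME = 'samplekit_dev'; // stand-in for the env var used by the real controller

const avatarKey = (userId: string, id: string) => `${DB_NAME}/user/${userId}/avatar/${id}`;
const userPrefix = (userId: string) => `${DB_NAME}/user/${userId}/`;

const parseAvatarKey = (key: string) => {
  const [dbName, userKey, userId, avatarKeyPart, id] = key.split('/');
  if (!dbName || !userId || !id) return null;
  if (userKey !== 'user' || avatarKeyPart !== 'avatar') return null;
  return { dbName, userId, id };
};

const allKeys = [
  avatarKey('u1', 'aaa'),
  avatarKey('u1', 'bbb'),
  avatarKey('u2', 'ccc'),
  'other_db/user/u1/avatar/ddd', // same user id, different database "folder"
];

// "Everything belonging to u1 in this database" is a plain prefix match.
const u1Keys = allKeys.filter((k) => k.startsWith(userPrefix('u1')));

// Ownership check: parse the key and compare the embedded ids.
const parsed = parseAvatarKey(allKeys[2]);
const u1OwnsThird = parsed?.dbName === DB_NAME && parsed?.userId === 'u1';
```

S3's ListObjectsV2 accepts a Prefix parameter, which is what makes this layout convenient for bulk operations.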

import crypto from 'crypto';

import { CLOUDFRONT_URL, DB_NAME, S3_BUCKET_URL } from '$env/static/private';

import type { ObjectStorage } from './types';

const createId = ({ length }: { length: number } = { length: 16 }) =>

crypto

.randomBytes(Math.ceil((length * 3) / 4)) // base64url yields 4 chars per 3 bytes, so this is enough for `length` chars

.toString('base64url')

.slice(0, length);

export const s3CloudfrontKeyController: ObjectStorage['keyController'] = (() => {

const keys = {

user: 'user',

avatar: 'avatar',

};

const transform = {

keyToObjectUrl: (key: string) => `${S3_BUCKET_URL}/${key}`,

keyToCDNUrl: (key: string) => `${CLOUDFRONT_URL}/${key}`,

objectUrlToKey: (url: string) => url.replace(`${S3_BUCKET_URL}/`, ''),

objectUrlToCDNUrl: (url: string) => url.replace(S3_BUCKET_URL, CLOUDFRONT_URL),

cdnUrlToKey: (url: string) => url.replace(`${CLOUDFRONT_URL}/`, ''),

cdnUrlToObjectUrl: (url: string) => url.replace(CLOUDFRONT_URL, S3_BUCKET_URL),

};

const is = {

objectUrl: (url?: string): url is string => !!url?.startsWith(S3_BUCKET_URL),

cdnUrl: (url?: string): url is string => !!url?.startsWith(CLOUDFRONT_URL),

};

const create = {

root: () => `${DB_NAME}/`,

user: {

avatar: ({ userId }: { userId: string }) => `${DB_NAME}/${keys.user}/${userId}/${keys.avatar}/${createId()}`,

},

};

const parse = {

root: (key: string) => {

const [dbName] = key.split('/');

if (!dbName) return null;

return { dbName };

},

user: {

avatar: (key: string) => {

const [dbName, userKey, userId, avatarKey, id] = key.split('/');

if (!dbName || !userId || !id) return null;

if (userKey !== keys.user || avatarKey !== keys.avatar) return null;

return { userId, id, dbName };

},

},

};

const guard = {

root: ({ key }: { key: string }) => {

const parsed = parse.root(key);

if (!parsed) return false;

return parsed.dbName === DB_NAME;

},

user: {

avatar: ({ key, ownerId }: { key: string; ownerId: string }): boolean => {

const parsed = parse.user.avatar(key);

if (!parsed) return false;

return parsed.dbName === DB_NAME && parsed.userId === ownerId;

},

},

};

return {

transform,

is,

create,

parse,

guard,

};

})();

Lastly, we saw in our flow that we need some kind of job that removes any images that were uploaded but not saved within a certain time. Let's implement that.

import { logger } from '$lib/logging/server';

import { invalidateCloudfront } from './cloudfront';

import { deleteS3Object } from './s3';

import { s3CloudfrontKeyController } from './s3CloudfrontKeyController';

import type { ObjectStorage } from './types';

/**

* Removes any images that were uploaded but haven't been stored within `jobDelaySeconds`.

*

* This is useful to protect against a client not notifying the server after an upload to a presigned url.

*/

export const createUnsavedUploadCleaner: ObjectStorage['createUnsavedUploadCleaner'] = ({

getStoredUrl,

jobDelaySeconds,

}: {

getStoredUrl: ({ userId }: { userId: string }) => Promise<string | undefined | null>;

jobDelaySeconds: number;

}) => {

const timeouts = new Map<string, ReturnType<typeof setTimeout>>();

const rmUnusedImage = async ({ cdnUrl, userId }: { cdnUrl: string; userId: string }) => {

const imageExists = await fetch(cdnUrl, { method: 'HEAD' }).then((res) => res.ok);

if (!imageExists) return;

const storedUrl = await getStoredUrl({ userId });

if (storedUrl === cdnUrl) return;

const key = s3CloudfrontKeyController.transform.cdnUrlToKey(cdnUrl);

await Promise.all([deleteS3Object({ key, guard: null }), invalidateCloudfront({ keys: [key] })]);

logger.info(`Deleted unsaved image for user ${userId} at ${cdnUrl}`);

};

const addDelayedJob = ({ cdnUrl, userId }: { cdnUrl: string; userId: string }) => {

const timeout = setTimeout(async () => {

await rmUnusedImage({ cdnUrl, userId });

timeouts.delete(cdnUrl);

}, jobDelaySeconds * 1000);

timeouts.set(cdnUrl, timeout);

};

const removeJob = ({ cdnUrl }: { cdnUrl: string }) => {

const timeout = timeouts.get(cdnUrl);

if (timeout) {

clearTimeout(timeout);

timeouts.delete(cdnUrl);

}

};

return { addDelayedJob, removeJob };

};

Server Endpoints

The last piece of the puzzle is to implement the two server endpoints: crop.json and upload.json.

Crop

crop.json/+server.ts is easy because we're just updating the crop value. Let's get that out of the way.

import { db } from '$lib/db/server';

import { jsonFail, jsonOk, parseReqJson } from '$lib/http/server';

import { croppedImgSchema } from '$lib/image/common';

import type { UpdateAvatarCropRes } from './common';

import type { RequestHandler } from '@sveltejs/kit';

const updateAvatarCrop: RequestHandler = async ({ locals, request }) => {

const { user } = await locals.seshHandler.userOrRedirect();

if (!user.avatar) return jsonFail(400, 'No avatar to crop');

const body = await parseReqJson(request, croppedImgSchema, { overrides: { url: user.avatar.url } });

if (!body.success) return jsonFail(400);

const avatar = body.data;

await db.user.update({ userId: user.id, values: { avatar } });

return jsonOk<UpdateAvatarCropRes>({ savedImg: avatar });

};

export const PUT = updateAvatarCrop;

Upload

For the uploader, we require a rate limiter and some way to save the presigned urls.

Rate Limiter

SampleKit uses its own rate limiter around a Redis client so that multiple instances of the app can be deployed. If that's not a consideration, a good in-memory rate limiter is sveltekit-rate-limiter, a package created by the author of sveltekit-superforms.
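To give a feel for what a limiter does, here is a tiny in-memory fixed-window sketch. It is neither SampleKit's limiter nor the sveltekit-rate-limiter API. `createFixedWindowLimiter` and its options are made up for this example, and because the counts live in process memory it only works for a single instance.

```typescript
type Limit = { max: number; windowMs: number };

// `now` is injectable so the example can use a fake clock.
const createFixedWindowLimiter = ({ max, windowMs }: Limit, now: () => number = Date.now) => {
  const windows = new Map<string, { start: number; count: number }>();
  return {
    /** Records a hit for `id` and reports whether it exceeded the limit. */
    check: (id: string): { limited: boolean; retryAfterSec: number } => {
      const t = now();
      const w = windows.get(id);
      // No window yet, or the old one expired: start a fresh window.
      if (!w || t - w.start >= windowMs) {
        windows.set(id, { start: t, count: 1 });
        return { limited: false, retryAfterSec: 0 };
      }
      if (w.count < max) {
        w.count += 1;
        return { limited: false, retryAfterSec: 0 };
      }
      return { limited: true, retryAfterSec: Math.ceil((w.start + windowMs - t) / 1000) };
    },
  };
};

// Usage with a fake clock: 2 uploads per 15 minutes per user.
let clock = 0;
const limiter = createFixedWindowLimiter({ max: 2, windowMs: 15 * 60 * 1000 }, () => clock);
const r1 = limiter.check('user-1');
const r2 = limiter.check('user-1');
const r3 = limiter.check('user-1'); // third call in the same window
clock = 15 * 60 * 1000;
const r4 = limiter.check('user-1'); // a new window has started
```

A Redis-backed limiter keeps the same kind of counter in shared storage, which is what lets several app instances enforce one limit.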

Signed URL Storage

The presigned urls are stored in the database.
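The storage only needs three operations, whose names match the calls the endpoint makes on db.presigned. As an illustration, here's an in-memory stand-in. `createPresignedStore` and the row shape are assumptions, and the real version would be async database queries rather than a Map.

```typescript
type PresignedRow = { userId: string; url: string; key: string };

// In-memory stand-in for a `presigned` table; the real version would be async DB queries.
const createPresignedStore = () => {
  const rows = new Map<string, PresignedRow>();
  return {
    // One row per user: requesting a new upload URL replaces the previous one.
    insertOrOverwrite: (row: PresignedRow): void => {
      rows.set(row.userId, row);
    },
    get: ({ userId }: { userId: string }): PresignedRow | undefined => rows.get(userId),
    delete: ({ userId }: { userId: string }): boolean => rows.delete(userId),
  };
};

const presigned = createPresignedStore();
presigned.insertOrOverwrite({ userId: 'u1', url: 'https://bucket.example/old', key: 'old' });
presigned.insertOrOverwrite({ userId: 'u1', url: 'https://bucket.example/new', key: 'new' });
const lookedUp = presigned.get({ userId: 'u1' }); // the server's record, not the client's claim
presigned.delete({ userId: 'u1' });
const afterDelete = presigned.get({ userId: 'u1' });
```

Keeping this record server-side is what lets the endpoint look up the uploaded object's url itself instead of trusting whatever the client reports.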

Endpoint

We can now implement the last endpoint, and with it, complete the entire feature.

import { db } from '$lib/db/server';

import { jsonFail, jsonOk, parseReqJson } from '$lib/http/server';

import { objectStorage } from '$lib/object-storage/server';

import { createLimiter } from '$lib/rate-limit/server';

import { toHumanReadableTime } from '$lib/utils/common';

import {

MAX_UPLOAD_SIZE,

checkAndSaveUploadedAvatarReqSchema,

type DeleteAvatarRes,

type CheckAndSaveUploadedAvatarRes,

type GetSignedAvatarUploadUrlRes,

} from './common';

import type { RequestHandler } from '@sveltejs/kit';

// generateS3UploadPost enforces max upload size and denies any upload that we don't sign

// uploadLimiter rate limits the number of uploads a user can do

// presigned ensures we don't have to trust the client to tell us what the uploaded objectUrl is after the upload

// unsavedUploadCleaner ensures that we don't miss cleaning up an object in S3 if the user doesn't notify us of the upload

// detectModerationLabels prevents explicit content

const EXPIRE_SECONDS = 60;

const uploadLimiter = createLimiter({

id: 'checkAndSaveUploadedAvatar',

limiters: [

{ kind: 'global', rate: [300, 'd'] },

{ kind: 'userId', rate: [2, '15m'] },

{ kind: 'ipUa', rate: [3, '15m'] },

],

});

const unsavedUploadCleaner = objectStorage.createUnsavedUploadCleaner({

jobDelaySeconds: EXPIRE_SECONDS,

getStoredUrl: async ({ userId }) => (await db.user.get({ userId }))?.avatar?.url,

});

const getSignedAvatarUploadUrl: RequestHandler = async (event) => {

const { locals } = event;

const { user } = await locals.seshHandler.userOrRedirect();

const rateCheck = await uploadLimiter.check(event, { log: { userId: user.id } });

if (rateCheck.forbidden) return jsonFail(403);

if (rateCheck.limiterKind === 'global')

return jsonFail(

429,

`This demo has hit its 24h max. Please try again in ${toHumanReadableTime(rateCheck.retryAfterSec)}`,

);

if (rateCheck.limited) return jsonFail(429, rateCheck.humanTryAfter('uploads'));

const key = objectStorage.keyController.create.user.avatar({ userId: user.id });

const res = await objectStorage.generateUploadFormDataFields({

key,

maxContentLength: MAX_UPLOAD_SIZE,

expireSeconds: EXPIRE_SECONDS,

});

if (!res) return jsonFail(500, 'Failed to generate upload URL');

await db.presigned.insertOrOverwrite({

url: objectStorage.keyController.transform.keyToObjectUrl(key),

userId: user.id,

key,

});

unsavedUploadCleaner.addDelayedJob({

cdnUrl: objectStorage.keyController.transform.keyToCDNUrl(key),

userId: user.id,

});

return jsonOk<GetSignedAvatarUploadUrlRes>({ url: res.url, formDataFields: res.formDataFields, objectKey: key });

};

const checkAndSaveUploadedAvatar: RequestHandler = async ({ request, locals }) => {

const { user } = await locals.seshHandler.userOrRedirect();

const body = await parseReqJson(request, checkAndSaveUploadedAvatarReqSchema);

if (!body.success) return jsonFail(400);

const presignedObjectUrl = await db.presigned.get({ userId: user.id });

if (!presignedObjectUrl) return jsonFail(400);

const cdnUrl = objectStorage.keyController.transform.objectUrlToCDNUrl(presignedObjectUrl.url);

const imageExists = await fetch(cdnUrl, { method: 'HEAD' }).then((res) => res.ok);

if (!imageExists) {

await db.presigned.delete({ userId: user.id });

unsavedUploadCleaner.removeJob({ cdnUrl });

return jsonFail(400);

}

const newAvatar = { crop: body.data.crop, url: cdnUrl };

const oldAvatar = user.avatar;

const newKey = objectStorage.keyController.transform.objectUrlToKey(presignedObjectUrl.url);

const { error: moderationError } = await objectStorage.detectModerationLabels({ s3Key: newKey });

if (moderationError) {

unsavedUploadCleaner.removeJob({ cdnUrl });

await Promise.all([objectStorage.delete({ key: newKey, guard: null }), db.presigned.delete({ userId: user.id })]);

return jsonFail(422, moderationError.message);

}

if (objectStorage.keyController.is.cdnUrl(oldAvatar?.url) && newAvatar.url !== oldAvatar.url) {

const oldKey = objectStorage.keyController.transform.cdnUrlToKey(oldAvatar.url);

await Promise.all([

objectStorage.delete({

key: oldKey,

guard: () => objectStorage.keyController.guard.user.avatar({ key: oldKey, ownerId: user.id }),

}),

objectStorage.invalidateCDN({ keys: [oldKey] }),

]);

}

unsavedUploadCleaner.removeJob({ cdnUrl });

await Promise.all([

db.presigned.delete({ userId: user.id }),

db.user.update({ userId: user.id, values: { avatar: newAvatar } }),

]);

return jsonOk<CheckAndSaveUploadedAvatarRes>({ savedImg: newAvatar });

};

const deleteAvatar: RequestHandler = async ({ locals }) => {

const { user } = await locals.seshHandler.userOrRedirect();

if (!user.avatar) return jsonFail(404, 'No avatar to delete');

const promises: Array<Promise<unknown>> = [db.user.update({ userId: user.id, values: { avatar: null } })];

if (objectStorage.keyController.is.cdnUrl(user.avatar.url)) {

const key = objectStorage.keyController.transform.cdnUrlToKey(user.avatar.url);

promises.push(

objectStorage.delete({

key,

guard: () => objectStorage.keyController.guard.user.avatar({ key, ownerId: user.id }),

}),

objectStorage.invalidateCDN({ keys: [key] }),

);

}

await Promise.all(promises);

return jsonOk<DeleteAvatarRes>({ message: 'Success' });

};

export const GET = getSignedAvatarUploadUrl;

export const PUT = checkAndSaveUploadedAvatar;

export const DELETE = deleteAvatar;

Conclusion

This one covered a lot of ground. I hope it helps you integrate AWS services into your SvelteKit app, gives you some ideas for structuring a state controller, and shows that users can safely upload images directly to your S3 bucket. There are, of course, more features that could be added, such as image transformations so the client isn't loading a full-size image for a tiny avatar. For that, there is an AWS solution (Serverless Image Handler) and a third-party option (imagekit.io). Whichever service you choose, the client and server code we've created could be applied there just as easily.
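
To make the image transformation idea a little more concrete, here is a minimal sketch of a helper that asks for an avatar sized to how it will actually be displayed. It is not part of the code above: `toResizedAvatarUrl`, `AvatarSizeOpts`, and the example domain are hypothetical names, and the `tr=w-…,h-…` query format is an assumption modeled on ImageKit-style URL transformations. Other services (including Serverless Image Handler) use a different request format, so check the docs of whichever one you pick.

```typescript
// Hypothetical options for sizing an avatar request.
interface AvatarSizeOpts {
	/** Rendered size of the square avatar in CSS pixels */
	cssPx: number;
	/** e.g. window.devicePixelRatio in the browser; defaults to 1 */
	devicePixelRatio?: number;
	/** Upper bound so we never request more than we would ever display */
	maxPx?: number;
}

// Assumption: the image service reads an ImageKit-style `tr` query parameter.
// Any existing query string on the URL is replaced.
function toResizedAvatarUrl(cdnUrl: string, opts: AvatarSizeOpts): string {
	const { cssPx, devicePixelRatio = 1, maxPx = 1024 } = opts;

	// Scale by pixel density, round up to whole pixels, then cap.
	const px = Math.min(maxPx, Math.ceil(cssPx * devicePixelRatio));

	const url = new URL(cdnUrl);
	url.search = `tr=w-${px},h-${px}`;
	return url.toString();
}
```

For a 48px avatar on a 2x display, `toResizedAvatarUrl('https://cdn.example.com/avatars/u1.jpg', { cssPx: 48, devicePixelRatio: 2 })` returns `https://cdn.example.com/avatars/u1.jpg?tr=w-96,h-96`. The stored `avatar.url` and `crop` stay untouched in the database; only the URL handed to the `<img>` tag changes.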

As always, please do share your thoughts over in the GitHub discussions. Until next time, happy coding!